Post-Event Recap: Navigating the New CMMC Era!

The CMMC phased roll-out officially began on November 10, 2025, and the Defense Industrial Base (DIB) landscape has changed forever.

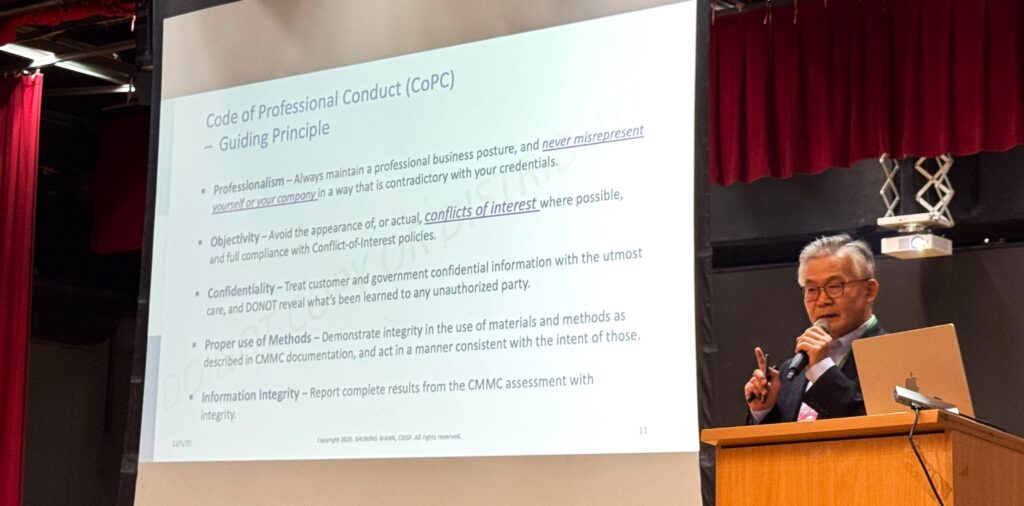

We are incredibly proud to highlight that our Founder & CEO, Mr. Robert Wann, was an invited speaker at a major industry event, where he shared critical insights on the CMMC Final Rules.

In his keynote, Mr. Wann detailed the gravity of the new 32 CFR and 48 CFR CMMC Final Rules and the phased roll-out that began on November 10, 2025. The presentation, “CMMC, the New Era of Cybersecurity Standard,” reinforced that CMMC is not just a contract requirement but the modern security standard for the entire U.S. Defense Industrial Base.

Key Takeaway: The time to comply is now. CMMC redefines what “good” cybersecurity looks like.

Thank you to everyone who attended! If you missed the session, stay tuned for potential recordings or follow-up articles from Enova as we continue to lead the conversation on compliance and defense.

#CMMC #Cybersecurity #DefenseIndustrialBase #Enova